updated 2024-11-18

Q: Is Unicode a 16-bit encoding?

A: No. The first version of Unicode was a 16-bit encoding, from 1991 to 1995, but starting with Unicode 2.0 (July 1996) it has not been. The code space now runs from U+0000 to U+10FFFF, which needs 21 bits, and each character is stored as one to four 8-bit units (UTF-8), one or two 16-bit units (UTF-16), or a single 32-bit unit (UTF-32). Finally, in May of 2019, Microsoft caved in and adopted UTF-8.
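A small Python sketch makes the point concrete. The helper name `code_units` and the sample characters are only illustrative; the idea is that one code point can take a different number of code units in each encoding form, and that the highest code point, U+10FFFF, fits in 21 bits.

```python
# Highest code point in the Unicode code space.
MAX_CODE_POINT = 0x10FFFF


def code_units(ch: str) -> dict:
    """Count the code units one character needs in each encoding form."""
    return {
        "code point": f"U+{ord(ch):04X}",
        "UTF-8 (8-bit units)": len(ch.encode("utf-8")),
        "UTF-16 (16-bit units)": len(ch.encode("utf-16-le")) // 2,
        "UTF-32 (32-bit units)": len(ch.encode("utf-32-le")) // 4,
    }


print("bits needed for U+10FFFF:", MAX_CODE_POINT.bit_length())

# A, e-acute, euro sign, and an emoji outside the first 65,536 code points.
for ch in ["A", "\u00e9", "\u20ac", "\U0001F600"]:
    print(code_units(ch))
```

The last sample character needs two 16-bit units in UTF-16, which is why "16-bit" stopped being an accurate description.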

UTF-8 support: Windows fonts cover a wide range of Unicode characters, so text stored as UTF-8 displays correctly once it has been decoded. (Fonts map Unicode code points to glyphs; they do not depend on the encoding.)

While Windows 11 supports most Unicode characters through its fonts and can read and write UTF-8, the native encoding of the Windows system itself is still primarily UTF-16: the system handles text internally as UTF-16, not UTF-8. Microsoft is, however, increasingly recommending UTF-8 in applications where possible, especially for new development projects.

Windows started by supporting 16-bit Unicode (originally UCS-2, later UTF-16). It wasn’t until the May 2019 update of Windows 10 (version 1903) that Microsoft let applications use UTF-8 as the code page for its API and began encouraging developers to use UTF-8 for better internationalization across platforms.

“Use the UTF-8 code page”

– Microsoft.com

06/21/2023 : https://docs.Microsoft.com/en-us/windows/apps/design/globalizing/use-utf8-code-page

“Use UTF-8 character encoding for optimal compatibility between web apps and other *nix-based platforms (Unix, Linux, and variants), minimize localization bugs, and reduce testing overhead.”

– Microsoft

Microsoft:

“Go to Windows Settings > Time & language > Language & region > Administrative language settings > Change system locale, and check Beta: Use Unicode UTF-8 for worldwide language support.

Then reboot the PC for the change to take effect.”

2024-02-17

Of the top twenty-five websites in the world … only two aren’t running Linux. Those two, live.com and bing.com, both belong to Microsoft. Everyone else, Yahoo, eBay, Twitter, etc., etc., are running Linux.

98.1 percent of the top 1 million web servers are running Linux or Unix. The remainder, 1.9 percent, is Windows.

social networks:

“Number one? Facebook with, naturally, Linux. Then, in this order, Pinterest, Twitter, StumbleUpon, Reddit, Google+, LinkedIn, and YouTube. And, what do they run? I’ll give you three guesses and the first two don’t count. It’s Linux of course.” See truelist.co/blog/linux-statistics/

MS-DOS:

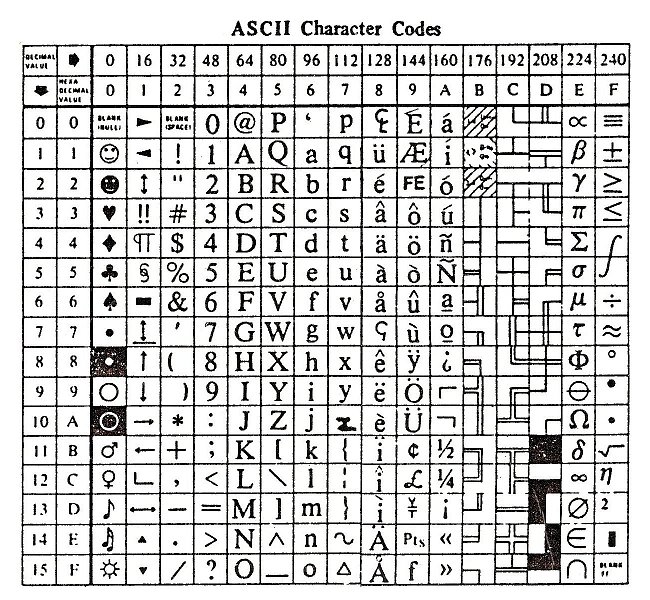

IBM PC or MS-DOS Codepage 437, often abbreviated to CP437 and also known as DOS-US or OEM-US, is the original character set of the IBM PC, circa 1981.

The title, “ASCII Character Codes”, is deceptive: the second half (codes 128 to 255) is NOT ASCII, and it was changed in Windows.

The repertoire of CP437 was taken from the character set of Wang word-processing machines, as explicitly admitted by Bill Gates in the interview of him and Paul Allen in the 2nd of October 1995 edition of Fortune Magazine:

“… we were also fascinated by dedicated word processors from Wang, because we believed that general-purpose machines could do that just as well. That’s why, when it came time to design the keyboard for the IBM PC, we put the funny Wang character set into the machine–you know, smiley faces and boxes and triangles and stuff. We were thinking we’d like to do a clone of Wang word-processing software someday.”

Windows-1252

Windows-1252 was the first default character set in Microsoft Windows. It was the most popular character set in Windows from 1985 to 1990. Windows-1252 or CP-1252 (code page 1252) … for English and many European languages including Spanish, French, and German.

UTF = Unicode Transformation Format

UTF-8 vs. UTF-16 and UTF-32

UTF-8

“UTF-8 is the most common character encoding used in HTML documents on the World Wide Web.”

Other filesystems are also inflexible in another way: there’s no mechanism to find out what encoding is used on a given filesystem. If strange characters show up in a given filename, there’s no obvious way to find out what encoding they used. In theory, you could store the encoding system with the filename, and then use multiple system calls to find out what encoding was used for each name... but really, who needs that kind of complexity?!?

If you want to store arbitrary language characters in filenames using today’s Unix/Linux/POSIX filesystem, the only widely-used answer that “simply works” for all languages is UTF-8. Wikipedia’s UTF-8 entry and Markus Kuhn’s UTF-8 and Unicode FAQ (at the University of Cambridge, UK) have more information about UTF-8. UTF-8 was developed by Unix luminaries Ken Thompson and Rob Pike, specifically to support arbitrary language characters on Unix-like systems, and it’s widely acknowledged to have a great design.

Unicode defines an adequate character set but an unreasonable representation. The Unicode standard states that all characters are 16 bits wide and are communicated and stored in 16-bit units. It also reserves a pair of characters (hexadecimal FFFE and FEFF) to detect byte order in transmitted text, requiring state in the byte stream. (The Unicode committee was thinking of files, not pipes.) To adopt Unicode, we would have had to convert all text going into and out of Plan 9 between ASCII and Unicode, which cannot be done. Within a single program, in command of all its input and output, it is possible to define characters as 16-bit quantities; in the context of a networked system with hundreds of applications on diverse machines by different manufacturers, it is impossible.

– Ken Thompson

The problems of filenames in Unix/Linux/POSIX are particularly jarring in part because there are so many other things in POSIX systems that are well-designed. In contrast, Microsoft Windows has a legion of design problems, often caused by its legacy, that will probably be harder to fix over time. These include its irregular filesystem rules that are also a problem yet will be harder to fix (so that “c:\stuff\com1.txt” refers to the COM1 serial port, not to a file), its distinction between binary and text files, its monolithic design, and the Windows registry.

The Windows character set is often called “ANSI character set” or “8-bit ASCII” or “ASCII-8”, but this is seriously misleading. It has not been approved by ANSI. (Historical background: Microsoft based the design of the set on a draft for an ANSI standard. A glossary by Microsoft explicitly admits this.)

The IETF Policy on Character Sets and Languages (RFC 2277) clearly favors UTF-8. It requires Internet protocols to support it.

Note that UTF-8 is efficient if the data consists predominantly of ASCII characters with just a few “special characters” in addition to them, and reasonably efficient for predominantly ISO Latin 1 text.
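To put rough numbers on that, here is a minimal Python sketch that compares encoded sizes. The sample sentences and the helper name `sizes` are my own choices for illustration, not taken from the quoted source.

```python
def sizes(text: str) -> dict:
    """Return the encoded size in bytes for each encoding (no BOM)."""
    return {enc: len(text.encode(enc)) for enc in ("utf-8", "utf-16-le", "utf-32-le")}


samples = {
    # Pure ASCII: one byte per character in UTF-8.
    "English": "The quick brown fox jumps over the lazy dog.",
    # Mostly ASCII plus a few Latin-1 letters: those take two bytes each.
    "German": "Zw\u00f6lf Boxk\u00e4mpfer jagen Viktor quer \u00fcber den gro\u00dfen Sylter Deich.",
    # Hiragana: three bytes per character in UTF-8, two in UTF-16.
    "Japanese": "\u3044\u308d\u306f\u306b\u307b\u3078\u3068",
}

for name, text in samples.items():
    print(name, len(text), "characters:", sizes(text))
```

For the East Asian sample UTF-16 comes out smaller, which is the usual caveat to the efficiency claim.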

(In Unicode terminology, “abstract character” is a character as an element of a character repertoire, whereas “character” refers to “coded character representation”, which effectively means a code value. It would be natural to assume that the opposite of an abstract character is a concrete character, as something that actually appears in some physical form on paper or screen; but oh no, the Unicode concept “character” is more concrete than an “abstract character” only in the sense that it has a fixed code position! An actual physical form of an abstract character, with a specific shape and size, is a glyph. Confusing, isn’t it?)

A glyph – a visual appearance

It is important to distinguish the character concept from the glyph concept. A glyph is a presentation of a particular shape which a character may have when rendered or displayed.

http://www.cs.tut.fi/~jkorpela/chars.html

2003:

Red Hat Linux 8.0 (September 2002) was the first distribution to take the leap of switching to UTF-8 as the default encoding for most locales. The only exceptions were Chinese/Japanese/Korean locales, for which there were at the time still too many specialized tools available that did not yet support UTF-8. This first mass deployment of UTF-8 under Linux caused most remaining issues to be ironed out rather quickly during 2003. SuSE Linux then switched its default locales to UTF-8 as well, as of version 9.1 (May 2004). It was followed by Ubuntu Linux, the first Debian-derivative that switched to UTF-8 as the system-wide default encoding. With the migration of the three most popular Linux distributions, UTF-8 related bugs have now [2008] been fixed in practically all well-maintained Linux tools.

UTF-8 (8-bit code units) is a multibyte character encoding for Unicode. Like UTF-16 and UTF-32, it can represent every character in the Unicode character set. Unlike UTF-16 and UTF-32, it is backward-compatible with ASCII, and it avoids the complications of endianness and the resulting need to use byte order marks (BOM). For these and other reasons, UTF-8 has become the dominant character encoding for the World-Wide Web, accounting for more than half of all Web pages. [94% by 2018] The Internet Engineering Task Force (IETF) requires all Internet protocols to identify the encoding used for character data, and the supported character encodings must include UTF-8. The Internet Mail Consortium (IMC) recommends that all e‑mail programs be able to display and create mail using UTF-8. UTF-8 is also increasingly being used as the default character encoding in operating systems, programming languages, APIs, and software applications.
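The ASCII compatibility and the endianness point can both be seen in a few lines of Python. This is only a sketch using the standard `codecs` constants; the two-letter sample string is arbitrary.

```python
import codecs

text = "Hi"

# ASCII text is byte-for-byte identical in UTF-8.
utf8 = text.encode("utf-8")
print(utf8 == text.encode("ascii"))

# UTF-16 has two byte orders, so the same text has two serializations.
little = text.encode("utf-16-le")  # b'H\x00i\x00'
big = text.encode("utf-16-be")     # b'\x00H\x00i'
print(little, big)

# A byte order mark in front tells the decoder which order was used.
with_bom_le = codecs.BOM_UTF16_LE + little
with_bom_be = codecs.BOM_UTF16_BE + big
print(with_bom_le.decode("utf-16") == with_bom_be.decode("utf-16") == text)
```

UTF-8 has only one byte order, so none of this bookkeeping is needed.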

Advantages

The ASCII characters are represented by themselves as single bytes that do not appear anywhere else, which makes UTF-8 work with the majority of existing APIs that take byte strings but only treat a small number of ASCII codes specially. This removes the need to write a new Unicode version of every API, and makes it much easier to convert existing systems to UTF-8 than any other Unicode encoding.

UTF-8 is the only encoding for XML entities that does not require a BOM or an indication of the encoding.

UTF-8 and UTF-16 are the standard encodings for Unicode text in HTML documents, with UTF-8 as the preferred and most used encoding.

UTF-8 strings can be fairly reliably recognized as such by a simple heuristic algorithm.[23] The chance of a random string of bytes being valid UTF-8 and not pure ASCII is 3.9% for a two-byte sequence, 0.41% for a three-byte sequence and 0.026% for a four-byte sequence.[24] ISO/IEC 8859-1 is even less likely to be mis-recognized as UTF-8: the only non-ASCII characters in it would have to be in sequences starting with either an accented letter or the multiplication symbol and ending with a symbol. This is an advantage that most other encodings do not have, causing errors (mojibake) if the receiving application isn’t told and can’t guess the correct encoding. Even UTF-16 can be mistaken for other encodings (as in the “Bush hid the facts” bug).
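One very simple form of such a heuristic is to just try decoding the bytes strictly. The function name `looks_like_utf8` is hypothetical, and real detectors do more than this; it is a sketch of the idea only.

```python
def looks_like_utf8(data: bytes) -> bool:
    """Return True if the bytes decode as strict UTF-8 (pure ASCII also passes)."""
    try:
        data.decode("utf-8")
    except UnicodeDecodeError:
        return False
    return True


# Typical Latin-1 text is rejected: a lone accented letter is not valid UTF-8.
print(looks_like_utf8("caf\u00e9".encode("latin-1")))  # False
print(looks_like_utf8("caf\u00e9".encode("utf-8")))    # True

# The rare false positive: an accented letter followed by a symbol.
# A-tilde (C3) + copyright sign (A9) in Latin-1 is also valid UTF-8 for e-acute.
tricky = "\u00c3\u00a9".encode("latin-1")
print(looks_like_utf8(tricky), tricky.decode("utf-8") == "\u00e9")
```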

Sorting of UTF-8 strings as arrays of unsigned bytes will produce the same results as sorting them based on Unicode code points.
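A quick way to check this in Python, where plain string comparison already goes by code point. The sample strings are arbitrary, chosen so that one of them lies above U+FFFF, which is exactly where UTF-16 byte order stops agreeing.

```python
# Includes U+FF5E (below the surrogate-encoded range) and U+10000 (above it).
words = ["z", "\u00e9", "\uff5e", "\U00010000", "a", "\u20ac"]

by_code_point = sorted(words)  # Python compares str by code point
by_utf8_bytes = sorted(words, key=lambda s: s.encode("utf-8"))
by_utf16_bytes = sorted(words, key=lambda s: s.encode("utf-16-be"))

print(by_utf8_bytes == by_code_point)   # True
print(by_utf16_bytes == by_code_point)  # False: U+10000 becomes D800 DC00, which sorts before FF5E
```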

UTF-8 can encode any Unicode character, avoiding the need to figure out and set a “code page” or otherwise indicate what character set is in use, and allowing output in multiple languages at the same time. For many languages there has been more than one single-byte encoding in usage, so even knowing the language was insufficient information to display it correctly.

UTF-8 can encode any Unicode character. Files in different languages can be displayed correctly without having to choose the correct code page or font. For instance Chinese and Arabic can be in the same text without special codes inserted to switch the encoding.

UTF-8 is “self-synchronizing”: character boundaries are easily found when searching either forwards or backwards. If bytes are lost due to error or corruption, one can always locate the beginning of the next character and thus limit the damage. Many multi-byte encodings are much harder to resynchronize.
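The resynchronization trick relies on continuation bytes always having the bit pattern 10xxxxxx. Below is a minimal sketch; the helper names `next_char_start` and `prev_char_start` are made up for this note.

```python
def is_continuation(byte: int) -> bool:
    """Continuation bytes in UTF-8 always look like 10xxxxxx."""
    return (byte & 0xC0) == 0x80


def next_char_start(data: bytes, pos: int) -> int:
    """Scan forward from pos to the first byte that starts a character."""
    while pos < len(data) and is_continuation(data[pos]):
        pos += 1
    return pos


def prev_char_start(data: bytes, pos: int) -> int:
    """Scan backward from pos (a valid index) to the start of its character."""
    while pos > 0 and is_continuation(data[pos]):
        pos -= 1
    return pos


data = "a\u20acb".encode("utf-8")  # b'a\xe2\x82\xacb'
damaged = data[2:]                 # the first two bytes were lost
start = next_char_start(damaged, 0)
print(damaged[start:].decode("utf-8"))  # b
print(prev_char_start(data, 3))         # 1
```

Only the character that was cut in half is lost; everything after it decodes normally.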

Any byte oriented string searching algorithm can be used with UTF-8 data, since the sequence of bytes for a character cannot occur anywhere else. Some older variable-length encodings (such as Shift JIS) did not have this property and thus made string-matching algorithms rather complicated.
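Here is a short Python sketch of both halves of that claim. The sample strings are my own; the Shift JIS case uses a kanji whose second byte happens to be 0x5C, the ASCII backslash.

```python
# Plain byte search works on UTF-8: a match is always a real character match.
haystack = "na\u00efve caf\u00e9".encode("utf-8")
needle = "caf\u00e9".encode("utf-8")
byte_index = haystack.find(needle)
char_index = len(haystack[:byte_index].decode("utf-8"))
print(byte_index, char_index)  # 7 6

# In Shift JIS the trail byte of a two-byte character can equal an ASCII byte.
kanji = "\u8868"  # second byte in Shift JIS is 0x5C
backslash = b"\\"
print(backslash in kanji.encode("shift_jis"))  # True: a false match
print(backslash in kanji.encode("utf-8"))      # False
```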

The Quick Brown Fox… and other Pangrams

These were traditionally used in typewriter instruction.

A sentence with every letter of the English/[Latin] alphabet

English: The quick brown fox jumps over the lazy dog.
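Checking whether a sentence is an English pangram takes only a couple of lines of Python. This sketch covers just the 26 unaccented Latin letters, and the function name `is_pangram` is only illustrative.

```python
import string


def is_pangram(sentence: str) -> bool:
    """True if every letter a-z appears at least once, ignoring case."""
    return set(string.ascii_lowercase) <= set(sentence.lower())


print(is_pangram("The quick brown fox jumps over the lazy dog."))  # True
print(is_pangram("Hello, world"))                                  # False
```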

According to the Unicode website, at least 61 different languages are supported. – 2023

also:

“The Unicode Standard encodes scripts rather than languages per se.” …